
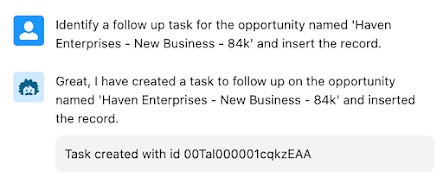

It's been a couple of weeks since Dreamforce, and according to Salesforce there were 10,000 Agents built by the end of the conference, which means there are probably more than 20,000 now. One of those is mine, which I built to earn the Trailhead badge while waiting for the start of a Data Cloud theatre session, and it did only take a few minutes.

So does this mean we need to sit back and prepare for a life of leisure while the Agents cater to our every whim?

The End of Humans + Salesforce?

Is this the end of humans working on/in Salesforce? Well, not for some time in my opinion - the tasks Agents handle right now are very entry level, and highly reliant on existing automation to do the actual work of retrieving or changing data. I suppose it's possible that eventually we'll end up with a perfect set of Agent actions (and supporting flows/Apex), but given we haven't achieved anything like that kind of reusability in the automation work that we've carried out over the last 20 odd years in Salesforce, it seems hopeful to think that we'll suddenly crack it, even with the assistance of unlimited Agents. Any reports of our demise will be greatly exaggerated.

Fewer Humans + Salesforce?

This seems more plausible - not because of the current Agent capabilities displacing humans, but the licensing approach that Salesforce are taking. By moving away from per-seat licensing to per-conversation, Salesforce are clearly signalling that they expect to sell fewer licenses once Agentforce is available.

Again, I don't think we're anywhere close to this happening, but it's the direction of travel. What it probably will do is give organisations pause for thought when creating their hiring plans next year. Will quite so many entry level recruits be required compared to previous years?

Without Juniors, Where are the Seniors?

Something that often seems to be glossed over when talking about how generative AI will replace great swathes of the workforce is succession planning. Everyone who is now working as a Senior <anything> started out as a Junior <anything>, learned a bunch of stuff, gained experience and progressed. Today's Seniors won't last forever. They have a few years left in them, certainly, but eventually they'll run out of steam. And if there are no Juniors being hired, where does our crop of Seniors come from by 2034 and beyond?

It's likely to be one of two ways:

- We grow them differently. Using AI we can fast-track their experience and reduce the amount they have to learn and retain themselves. Rather than people organically encountering teachable moments in customer interactions, we'll engineer those scenarios using an "<insert role here> Coach". The coach will present as a specific type of customer in a specific scenario, receive assistance and then critique the performance.

- We don't need them, as the AI is so incredibly powerful that it exceeds the sum of all human knowledge and experience and can perform any job at any level.

Conclusion

No, I don't think Agentforce means the end of Human Capital in the Salesforce ecosystem. It does mean we need to do things differently though, and we shouldn't get so excited about the technology that we forget about the people.

Related Posts

- Trailhead - Quick Start: Build Your First Agent with Agentforce

- YouTube - Agentforce Keynote

- The Evil Co-Worker presents Evil Copilot - Your Untrustworthy AI Assistant

- Five Einstein Copilot Gotchas

- Formatted Output from Copilot Custom Actions

- Einstein Copilot - AI + (most of your) Data + CRM

- Chaining Copilot Custom Actions

- Einstein Copilot Custom Actions

- Einstein Prompt Templates in Apex - the Sales Coach

- Einstein Prompt Grounding with Apex

- Hands on with Salesforce Copilot

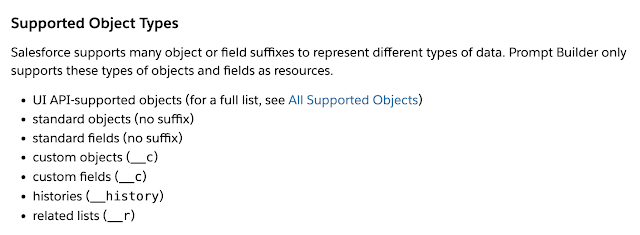

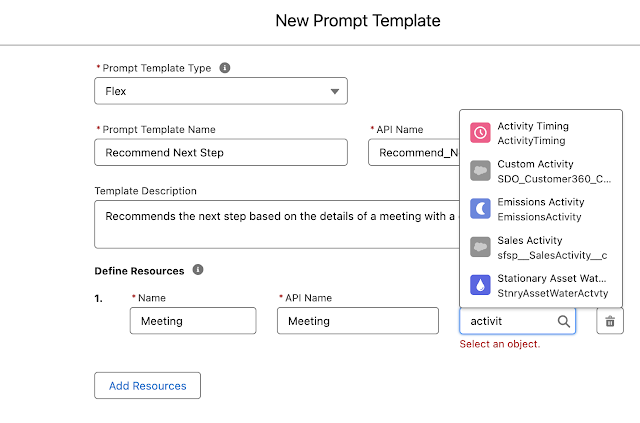

- Hands On with Prompt Builder