Introduction

Regular readers of this blog will know that I'm curious about the CPU time

consumed by various developer activities on the Salesforce platform - as I've

written before, developing at enterprise scale often turns into a Man Vs CPU

battle. One area I've been meaning to look into for a while is list sorting,

and it's been an interesting investigation.

Sorting Lists

Sorting sounds quite simple - if it's a list of primitives then you can

probably use the built-in List.sort() method. If not then you'll likely want

to implement the Comparable interface, which requires a single method:

Integer compareTo(Object compareTo)

This method compares the current object instance to the compareTo object

instance and returns +1 if this instance is the larger, -1 if it is the

smaller and 0 if both instances are the same. How you determine the result

depends on your specific business logic, but can also have a significant

effect on your request's CPU consumption, as the following scenarios will

show. To capture the CPU consumed, in each scenario I created a list with

10,000 elements using Math.random() to ensure as little ordering as possible

in the initial creation. I then sorted this list, capturing the CPU consumed

before and afterwards, with the log level turned right down. I wouldn't take

too much notice of the exact values, but the key point is the difference

between them. If you are interested in the exact implementation, click the link under each scenario title to see the code in the Github repository.

Primitive Sort

PrimitiveSort.cls

The baseline to compare against - this consumed 20 milliseconds of CPU.

Single Property

SimpleComparableSort.cls

A custom object containing a single Integer property and a compareTo method

that accessed this property directly on both instances. This consumed 336

milliseconds of CPU - a significant increase to access a single property.

Multiple Properties Combined

MultiComparableSort.cls

A custom object containing two Integer properties and a compareTo method that

accesses the properties directly, and multiplies them together before

comparing. This consumed 865 milliseconds of CPU - another significant

increase.

Multiple Properties Combined and Calculated in Advance

MultiComparableCalcSort.cls

A custom object containing two Integer properties that multiplies them

together and stores them in a third property which is used directly in the

compareTo method. This is an iteration on the MultiComparableSort custom

object scenario, and consumed 352 milliseconds, showing that it can be worth doing some

additional work in advance on the objects you are going to sort.

Method Call

MethodComparableSort.cls

A custom object containing an Integer property that is not public, requiring a

method to be executed to retrieve the value for the instance being compared

to. This consumed 773 milliseconds of CPU time, an increase of over 100% from

accessing the property directly.

Creating a public property containing a read only copy of the value for

sorting purposes would change this back to the performance of the

SimpleComparableSort class, bringing it back to 336 milliseconds. A slight

change to the object, but a big saving.

Multiple Methods Called and the Results Combined

MultiMethodComparableSort.cls

A custom object containing two private properties which must be multiplied

together to use in the compareTo method. In this case there are two methods

called every time compareTo is executed, pushing the CPU up to 1081 milliseconds Again, this could be improved by creating public read-only properties exposing

the values, or calculating them in advance and storing them in a single public

property.

SObject Comparing Two Fields

SObjectComparableSort.cls

A more complex scenario - a list of custom objects containing an Opportunity sObject that require a two

step comparison - first the stages are compared and if they are identical, the

amounts are then checked. The stage names are stored in a list and the

position in the list for each object determined using the indexOf method. This

consumed 3428 milliseconds of CPU - over 1/3 of that available to the entire

transaction.

SObject Comparing Two Fields - Calculate Stage Value

SObjectComparableIndexSort.cls

An iteration on the previous scenario - calculating the index for the stage when the custom object is constructed, rather than determining it on the fly in the compareTo method. This consumed 1911 milliseconds of CPU time. An improvement of just under 50% for very little effort.

SObject Comparing Two Fields - Calculate Value for Sorting

SObjectComparableCalcSort.cls

A further iteration on the previous scenario - calculating a unique value to represent the stage and the amount. This might not be a practical approach in many real world cases, but it's something to think about if you encounter problems. I've decided that my maximum opportunity value is five million, so I get the stage index and multiply it by one billion, then add on the opportunity amount. This allows me to revert back to sorting on a single property, and unsurprisingly brings the CPU consumed down to 349 milliseconds.

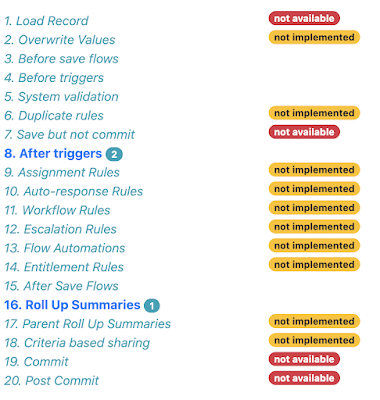

Understanding and Mitigating the CPU Impact

The reason for the significant increases with what feels like small increases in complexity is down to the sheer number of times that the compareTo method will be invoked on a decent sized list. It's easy to get into a mindset that this method is called once per object, or maybe a couple of times, but the truth is very different. In the scenarios above with a 10,000 item list, compareTo was called over 120,000 times, so the impact of adding a method call, accessing another property, or checking against multiple properties scales up really fast. If you are interested in reading more about why this is, check out Insertion sort or Selection sort for some walkthroughs of what actually happens during a sort.

The simplest way to mitigate this is to move as much work out of the compareTo method and do it up front, either at construction time or as a pre-sort activity. As an example from my scenarios above, replacing a method call with a public property requires 10,000 executions of the code to set up the property, rather than 120,000 executions of the method to access the private value. The real sweet spot is if you can generate a primitive that represents the ordinal value of the object, as sorting on that is far more efficient than comparing various aspects of the object.

Conclusion

The key takeaway here, as usual, is to think about the effect of the code that you are writing. If you are implementing Comparable, take a few minutes to profile the code with lists of reasonable size and see if you can do anything to reduce the impact. Most Salesforce instances start out simple with small data volumes, but it's remarkable how quickly the amount of data will scale and you need to make sure your code scales with it.

Related