
Introduction

To create and use GPTs, you need to be a ChatGPT Plus subscriber at $20/month, although in the UK VAT is added on top, so it works out at around £20/month. The subscription also gives priority access to new features, the latest models and tools, faster response times and access even at peak times. I signed up just to try out GPTs though, as they looked like a world of fun.

The Replicant

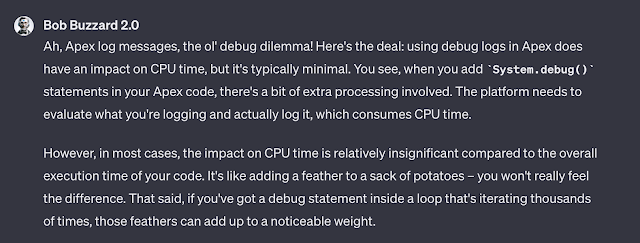

My first custom GPT is my replicant - Bob Buzzard 2.0. A GPT that has been pointed at most of my public and some of my private information. Instructed to respond as I would, you can expect irreverent or sarcastic responses as the mood takes (AI) me. Obviously very focused on Salesforce, and keen on Apex code.

Right now you'll need to be a ChatGPT Plus user to access custom GPTs, but if you are, you can find Bob Buzzard 2.0 at: https://chat.openai.com/g/g-DOVc9phwC-bob-buzzard-2-0

Here's a snippet of a response from my digital twin regarding the impact of log messages on CPU, something I've investigated in detail in the past:

Creating GPTs

This is incredibly simple - you just navigate to the create page and tell it in natural language how you want it to behave, define the skills, point it at additional web sites or upload additional information. It's easy and requires no technical knowledge, which does make me wonder why they announced it at Developer Day given there's no development needed, but let's not tilt at that windmill.

A Couple of Warnings

First, remember that any private information that you upload to a GPT won't necessarily remain private. If you don't instruct your custom GPT to keep its instructions and material private, it will happily share them on request.

Second, I've given the replicant a mischievous side - from time to time it will just gainsay your original decisions when you ask for help with specific problems, maybe suggesting you have picked the wrong Salesforce technology, or telling you to bin it all off and use another vendor. Think of this as your reminder that a human should always be involved in any decision making based on advice from AI.

I'm Going to be Rich?

Something else that was announced at Developer Day was revenue sharing - if people use Bob Buzzard 2.0 I'll get a slice of the pie. So does this mean I'm going to be rich? Like always, almost certainly not. As you just click a button and answer questions to create a GPT, there will be millions of them before too long. They are so easy to create that a service like Salesforce development advice, with the vast amount of content already in the public domain, will be extremely competitive - and an extremely crowded marketplace of similar products means everybody earns nothing.

That said, I think this is something that genuine creatives will be able to earn from. Rather than having their work used to train models that can then be used to produce highly derivative works for close to free, they can create their own GPT and at least stand a chance of getting paid. Whether the earnings will be worth it we don't yet know, although history suggests the platform providers will keep everything they can.

Related Information

- The Einstein Trust Layer must become the Einstein Trust Platform

- Einstein Sales Emails

- Salesforce CLI OpenAI Plug-in - Function Calling

- Salesforce CLI OpenAI Plug-In - Generating Records

- Salesforce GPT - It's Alive!

- Get AI Ready - Prompt Engineering (BrightGen Webinar Recording)

- Get AI Ready - Prepare for AI Cloud (BrightGen webinar recording)

- Salesforce CLI OpenAI Plug-in

- Salesforce World Tour London : AI Day

- Predictions for Digital Life in 2035

- Einstein GPT - Rise of the Machines?