
SFDX and the Metadata API Part 4 - VSCode Integration

Introduction

In the previous instalments of this blog series I’ve shown how to deploy metadata, script the deployment to avoid manual polling and carry out destructive changes. All key tasks for any developer, but executed from the command line. On a day to day basis I, like just about any other developer in the Salesforce ecosystem, will spend large periods of the day working on code in an IDE. As it has Salesforce support (albeit still somewhat fledgling) I’ve switched over completely to the Microsoft VSCode IDE. The Salesforce extension does provide a mechanism to deploy local changes, but at the time of writing (Jan 2018) only to scratch orgs, so a custom solution is required to target other instances.

In the examples below I’m using the deploy.js Node script that I created in SFDX and the Metadata API Part 2 - Scripting as the starting point.

Sample Code

My sample class is so simple that I can’t think of anything to say about it, so here it is:

public with sharing class VSCTest1 {
    public VSCTest1() {
        Contact me;
    }
}

and the package.xml to deploy this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<Package xmlns="http://soap.sforce.com/2006/04/metadata">
    <types>
        <members>*</members>
        <name>ApexClass</name>
    </types>
    <version>40.0</version>
</Package>

VSCode Terminal

VSCode has a nice built-in terminal in the lower panel, so the simplest and least integrated solution is to run my commands through this. It works, and I get my set of results, but it’s clunky.

VSCode Tasks

If I’m going to execute deployments from my IDE, what I’d really like is a way to start them from a menu or shortcut key combination. Luckily the designers of VSCode have foreseen this and have the concept of Tasks. Simply put, a Task is a way to configure VSCode with details of an external process that compiles, builds, tests etc. Once configured, the process will be available via the Task menu and can also be set up as the default build step.

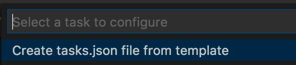

To configure a Task, select the Tasks -> Configure Tasks menu option and choose the Create tasks.json file from template option in the command bar dropdown:

Then select Others from the resulting menu of Task types:

This will generate a boilerplate tasks.json file with minimal information, which I then add details of my node deploy script to:

{
    "version": "2.0.0",
    "tasks": [
        {
            "label": "build",
            "type": "shell",
            "command": "node",
            "args": ["deploy.js"]
        }
    ]
}

I then execute this via the Tasks -> Run Task menu, choosing 'build' from the command bar dropdown and selecting 'Continue without scanning the task output'.

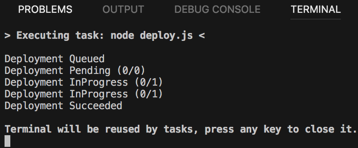

This executes my build in the terminal window much like before, but saves me having to remember and enter the command each time:

Sadly I can’t supply parameters to the command when executing it, so if I need to deploy to multiple orgs I need to create multiple entries in the tasks.json file, but for the purposes of this blog let’s imagine I’m living a very simple life and only ever work in a single org!

Capturing Errors

Executing my command from inside VSCode is the first part of an integrated experience, but I still have to check the output myself to figure out if there are any errors and which files they are located in. For that true developer experience I’d like feedback from the build stage to be immediately reflected in my code. To capture an error I first need to generate one, so I set my class up to fail:

public with sharing class VSCTest1 {
    public VSCTest1() {
        Contact me;
        // this will fail
        me.do();
    }
}

VSCode Tasks can pick up errors, but it requires a bit more effort than simple configuration.

Tasks detect errors via ProblemMatchers - these take a regular expression to parse an error string produced by the command and extract useful information, such as the filename, line and column number and error message.

While my deploy script has access to the error information, it’s in JSON format which the ProblemMatcher can’t process. Not a great problem though, as my node script can extract the errors from the JSON and output them in regexp friendly format.

Short Diversion into the Node Script

As I’m using execFileSync to run the SFDX command from my deploy script, a non-zero result from the command, which SFDX returns if there are failures on the deployment, throws an exception and halts the script. To get around this without having to resort to executing the command asynchronously and capturing stdout, stderr etc., I simply send the error stream output to a file and catch the exception, if there is one. I then check the error output: if it shows a failure on deployment, I use it in place of the regular output stream; if it is a “real” exception, I let the command fail. This is all handled by a single function that also turns the captured response into a JavaScript object:

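// modules used by this function
var fs=require('fs');
var child_process=require('child_process');

function execHandleError(cmd, params) {
    // keep the working variables local rather than leaking them as globals
    var resultJSON, result;
    // send the command's error stream to a file so it can be inspected afterwards
    var err=fs.openSync('/tmp/err.log', 'w');
    try {
        resultJSON=child_process.execFileSync(cmd, params, {stdio: ['pipe', 'pipe', err]});
        result=JSON.parse(resultJSON);
        fs.closeSync(err);
    }
    catch (e) {
        fs.closeSync(err);
        // the command returned non-zero - this may mean the metadata operation
        // failed, or there was an unrecoverable error
        // Is there an opening brace?
        var errMsg=''+fs.readFileSync('/tmp/err.log');
        var bracePos=errMsg.indexOf('{');
        if (-1!=bracePos) {
            // deployment failure - parse the JSON from the error stream
            resultJSON=errMsg.substring(bracePos);
            result=JSON.parse(resultJSON);
        }
        else {
            // no JSON in the error output, so this is a real failure
            throw e;
        }
    }
    return result;
}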
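As an alternative to redirecting the error stream to a temporary file, Node's child_process.spawnSync returns the exit status and the captured streams rather than throwing on a non-zero exit. The following is only a minimal sketch of that approach, not the code from deploy.js: the function name runJsonCommand is hypothetical, and it assumes, as the function above does, that the command writes its JSON to stdout on success and to stderr on failure.

```javascript
const { spawnSync } = require('child_process');

// Hypothetical helper (not part of deploy.js): run a command and return the
// JSON it produced, whether the command succeeded or failed.
function runJsonCommand(cmd, params) {
  const proc = spawnSync(cmd, params, { encoding: 'utf8' });
  if (proc.error) {
    // the command could not be started at all
    throw proc.error;
  }
  if (proc.status === 0) {
    // success - assume the JSON response is on stdout
    return JSON.parse(proc.stdout);
  }
  // non-zero exit - look for a JSON payload on stderr
  const bracePos = proc.stderr.indexOf('{');
  if (bracePos === -1) {
    throw new Error(cmd + ' exited with status ' + proc.status + ': ' + proc.stderr);
  }
  return JSON.parse(proc.stderr.substring(bracePos));
}

// Demonstration using node itself as a stand-in command that fails with JSON on stderr
const demo = runJsonCommand(process.execPath, [
  '-e',
  'console.error("ERROR " + JSON.stringify({status: 1})); process.exit(1)'
]);
console.log(demo);
```

The trade-off is that both streams are held in memory, which should be fine for a deployment response but may not suit commands with very large output.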

Once my deployment has finished, I check to see if it failed and if it did, extract the failures from the JSON response:

if ('Failed'===result.result.status) {
    if (result.result.details.componentFailures) {
        // handle if single or array of failures
        var failureDetails;
        if (Array.isArray(result.result.details.componentFailures)) {
            failureDetails=result.result.details.componentFailures;
        }
        else {
            failureDetails=[];
            failureDetails.push(result.result.details.componentFailures);
        }
        ...
    }
    ...
}

I then iterate over the failures and output a text version of each:

for (var idx=0; idx<failureDetails.length; idx++) {
    var failure=failureDetails[idx];
    console.log('Error: ' + failure.fileName +
                ': Line ' + failure.lineNumber +
                ', col ' + failure.columnNumber +
                ' : '+ failure.problem);
}
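The two steps above, normalising a single failure or an array of failures and then printing one line per failure, can be tried in isolation with something like the sketch below. The helper names asArray and formatFailure are hypothetical, and the sample response is made up purely for illustration; only the field names are taken from the snippets above.

```javascript
// Hypothetical helper: wrap a single failure in an array, pass arrays through
function asArray(value) {
  if (value === undefined || value === null) {
    return [];
  }
  return Array.isArray(value) ? value : [value];
}

// Format one failure in the same shape that the script prints
function formatFailure(failure) {
  return 'Error: ' + failure.fileName +
    ': Line ' + failure.lineNumber +
    ', col ' + failure.columnNumber +
    ' : ' + failure.problem;
}

// Made-up response for illustration only - a single failure, not an array
const sampleResult = {
  result: {
    status: 'Failed',
    details: {
      componentFailures: {
        fileName: 'src/classes/VSCTest1.cls',
        lineNumber: 5,
        columnNumber: 12,
        problem: 'Example problem text'
      }
    }
  }
};

const lines = asArray(sampleResult.result.details.componentFailures).map(formatFailure);
lines.forEach(function (line) {
  console.log(line);
});
```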

Back in the Room

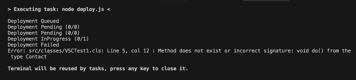

Rerunning the task shows any errors that occur:

I can then create my regular expression to extract information from the failure text - I used regular expressions 101 to create this, as it allows me to baby step my way through building the expression. Once I’ve got the regular expression down, I add the ProblemMatcher stanza to tasks.json:

"problemMatcher": {
    "owner": "BB Apex",
    "fileLocation": [
        "relative",
        "${workspaceFolder}"
    ],
    "pattern": {
        "regexp": "^Error: (.*): Line (\\d+), col (\\d+) : (.*)$",
        "file": 1,
        "line": 2,
        "column": 3,
        "message": 4
    }
}
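To check a pattern of this shape outside VSCode, it can be applied to a sample error line in Node. This is just a sketch: the helper name parseProblem and the sample line are hypothetical, and the digit groups are written as (\d+) so that multi-digit line and column numbers are captured whole.

```javascript
// The error pattern as a JavaScript literal (single backslashes, as there is
// no JSON string escaping here)
const pattern = /^Error: (.*): Line (\d+), col (\d+) : (.*)$/;

// Hypothetical helper mirroring the file/line/column/message group indices
function parseProblem(line) {
  const m = pattern.exec(line);
  if (!m) {
    return null;
  }
  return {
    file: m[1],
    line: Number(m[2]),
    column: Number(m[3]),
    message: m[4]
  };
}

// Made-up sample in the format printed by the deploy script
const sample = 'Error: src/classes/VSCTest1.cls: Line 12, col 7 : Example problem text';
const parsed = parseProblem(sample);
console.log(parsed);
```

The position of the quantifier matters: a group written as (\d)+ repeats the group itself, so it would capture only the final digit of a number such as 12.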

Now when I rerun the deployment, the problems tab contains the details of the failures surfaced by the script:

and I can click on the error to be taken to the location in the offending file.

There’s a further wrinkle to this, in that lightning components report errors in a slightly different format - the row/column in the result is undefined, but if it is known it appears in the error message on the following line, e.g.

Error: src/aura/TakeAMoment/TakeAMomentHelper.js: Line undefined, col undefined : 0Ad80000000PTL3:8,2: ParseError at [row,col]:[9,2]

Message: The markup in the document following the root element must be well-formed.

This is no problem for my task, as the ProblemMatcher attribute can specify an array of elements, so I just add another one with an appropriate regular expression:

"problemMatcher": [ {
    "owner": "BB-apex",
    ...
},
{
    "owner": "BB-lc",
    "fileLocation": [
        "relative",
        "${workspaceFolder}"
    ],
    "pattern": [ {
        "regexp": "^Error: (.*): Line undefined, col undefined : (.*): ParseError at \\[row,col\\]:\\[(\\d+),(\\d+)]$",
        "file": 1,
        "line": 3,
        "column": 4
    },
    {
        "regexp":"^(.*$)",
        "message": 1
    } ]
}],

Note that I also specify an array of patterns to match the first and second lines of the error output. If the error message was spread over 5 lines, I’d have 5 of them.
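The idea of one pattern per output line can be illustrated in Node by matching an array of expressions against consecutive lines. This is only a sketch of the concept, not how VSCode implements it; the helper name matchMultiLine is hypothetical, and the input is the Lightning component error shown earlier.

```javascript
// One expression per line of the error output, in order
const patterns = [
  /^Error: (.*): Line undefined, col undefined : (.*): ParseError at \[row,col\]:\[(\d+),(\d+)]$/,
  /^(.*$)/
];

// Hypothetical helper: try the patterns against consecutive lines from `start`
function matchMultiLine(lines, start) {
  if (start + patterns.length > lines.length) {
    return null;
  }
  const matches = [];
  for (let i = 0; i < patterns.length; i++) {
    const m = patterns[i].exec(lines[start + i]);
    if (!m) {
      return null;
    }
    matches.push(m);
  }
  // file, line and column come from the first line, the message from the second
  return {
    file: matches[0][1],
    line: Number(matches[0][3]),
    column: Number(matches[0][4]),
    message: matches[1][1]
  };
}

const output = [
  'Error: src/aura/TakeAMoment/TakeAMomentHelper.js: Line undefined, col undefined : 0Ad80000000PTL3:8,2: ParseError at [row,col]:[9,2]',
  'Message: The markup in the document following the root element must be well-formed.'
];
const problem = matchMultiLine(output, 0);
console.log(problem);
```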

You can view the full deploy.js file at the following GIST, and the associated tasks.json.

Default Build Task

Once the tasks.json file is in place, you can set this up as the default build task by selecting the Tasks -> Configure Default Build Task menu option, and choosing build from the command bar dropdown. Thereafter, just use the keyboard shortcut to execute the default build.