
Getting Started with Salesforce Wave

Introduction

Salesforce Wave, aka the Analytics Cloud, was the major new product launched at Dreamforce 14. Over the last month or so I’ve had a chance to try it out, thanks to the MVP program and the fact that I work for BrightGen, a Salesforce Platinum Partner here in the UK.

Setting Up

Setting up Wave is straightforward: it is mostly a matter of creating and assigning permission sets, and is covered in detail in the Salesforce Help.

Loading Data

Obviously it’s rather difficult to use an analytics product without data, and Wave provides a number of mechanisms to import your data. For the purposes of this post I’m ignoring the ETL providers (Informatica, Jitterbit, Mulesoft etc.) and focusing on the mechanisms provided by the platform or tools created by Salesforce.

The product is still pretty new, so there isn’t a particularly fancy UI for loading data yet, although I’m sure this will be coming shortly. Personally, this is the way I prefer things - I’d much rather get access to the functionality as soon as I can, rather than waiting for a funky user interface.

Importing a CSV File

The simplest way to load data is to import a CSV file. A good source of sample data is the Government Statistical data sets page. In this post I’m using the Land Registry Price Paid current month data, which provides 87,000 rows of data (Data produced by Land Registry © Crown copyright 2014). This only contains the raw data, so I need to add a header row at the top of the file based on the column headers. The first few column headers are shown below:
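Adding the header row is easy to script rather than doing it in a text editor. Here is a minimal Python sketch of the idea; the column names in `HEADERS` are placeholders I've made up for illustration (use the headers documented for the file you download), and the sample row is invented rather than real Land Registry data:

```python
import csv
import io
import shutil

# Hypothetical column names, for illustration only.
HEADERS = ["Transaction_Id", "Price", "Date_Of_Transfer", "Postcode"]


def prepend_header(source, target, headers):
    """Write a CSV header row to target, then copy source through unchanged."""
    csv.writer(target, lineterminator="\n").writerow(headers)
    shutil.copyfileobj(source, target)


# Made-up sample row standing in for the downloaded file.
sample = io.StringIO('"{ABC-123}","250000","2014-12-01 00:00","CM1 1AA"\n')
output = io.StringIO()
prepend_header(sample, output, HEADERS)
print(output.getvalue())
```

For a real file, the same function works with objects returned by `open(path, newline="")` in place of the `io.StringIO` buffers. The data rows are copied as raw text, so the original quoting is left untouched.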

Navigate to the Analytics app home page and click the Create button and choose Dataset from the drop down (there might be other options depending on your permissions):

from the resulting page, choose the CSV option:

and select the file to upload:

Note that the upload section also mentions a JSON Metadata File. This is optional, but without it each column in the file will be assumed to be a dimension, which means that I’ll only be able to show the record count matching particular criteria. I’m more interested in prices - for example, the total amount paid per county or the average for postcodes near me. Providing a schema file allows me to specify that the price column contains a measure by setting its type to ‘Numeric’:
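To illustrate the idea of "everything is a dimension unless marked as a measure", here is a rough Python sketch that generates a simplified description of the columns. Treat it purely as an illustration: the key names (`fields`, `name`, `type`) and the `Text` type are my assumptions, and the real metadata file has its own documented layout with more attributes than shown here. Only the ‘Numeric’ type for the price column comes from the steps above.

```python
import json


def build_schema(columns, measures):
    """Describe each column as a text dimension unless it is listed as a measure."""
    fields = []
    for column in columns:
        # Assumed type names: "Numeric" for measures, "Text" for dimensions.
        field_type = "Numeric" if column in measures else "Text"
        fields.append({"name": column, "type": field_type})
    return {"fields": fields}


# Hypothetical column names, for illustration only.
schema = build_schema(["Transaction_Id", "Price", "County"], measures={"Price"})
print(json.dumps(schema, indent=2))
```

The point of the sketch is the default: any column not explicitly named as a measure falls back to a dimension, which is why a file uploaded without metadata can only be counted, not summed or averaged.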

The full schema file can be accessed here. (I didn’t create this by hand, in case you were wondering; it was auto-generated by a nifty tool that I’ll cover in a later blog post once the Analytics Cloud External API is out of pilot.)

Finally, I specify the name for the dataset and the application (essentially a folder) to store it in. I’ve called this ‘December_Price_Paid’ and stored it in ‘My Private App’, which is totally private to me, much like the private workspace in CRM Content. Clicking Create Dataset uploads the file and queues it for processing - the message says that this can take up to an hour, but much depends on what other activity is taking place. I uploaded this on a Saturday morning and it took about 3 minutes to be processed.

Exploring a Dataset

Once the dataset is loaded, it appears in the list of datasets for the specified application:

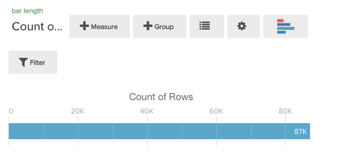

Clicking on the dataset takes me to the basic view, which displays the total number of records:

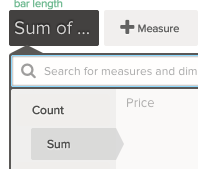

Nothing particularly interesting so far, but simply clicking on the ‘Measure’ and changing this to the sum of price paid:

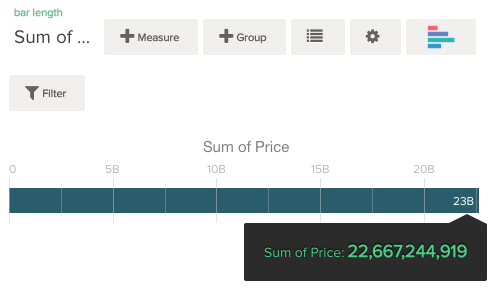

gives me the total spend for the month - nearly 23 billion (did I mention the UK housing market is crazy?):

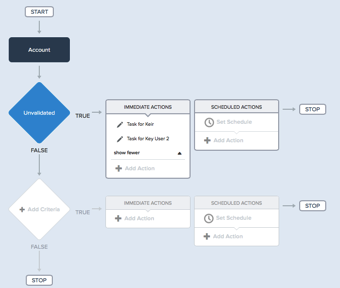

Now I can start to see the real power of Wave - simply creating a grouping by County allows me to view the total spend per county in a couple of clicks and see the total spend for Essex, where I live:

I can then drill into Essex by district and change the measure to show the average price paid per property to find out how much people are prepared to pay to live here:

Now I could probably load this data into Salesforce and build out reports and dashboards to show the same data, but it would take me a lot longer than the few minutes I spent setting up this dataset and exploring it. Plus, if I wanted to look at the data slightly differently I’d need to build a new set of reports/dashboards, whereas with Wave I just return to the base dataset view and change my measures, grouping and filters.
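For anyone who thinks better in code, the measure/grouping/filter combination is conceptually a group-by aggregation. The Python sketch below shows the equivalent calculation on a tiny in-memory CSV; it says nothing about how the Wave query engine works internally, and the column names and the three rows are made up for illustration:

```python
import csv
import io
from collections import defaultdict


def summarise(rows, group_by, measure):
    """Return {group: (total, average)} for an integer-valued column."""
    totals = defaultdict(int)
    counts = defaultdict(int)
    for row in rows:
        key = row[group_by]
        totals[key] += int(row[measure])
        counts[key] += 1
    return {key: (totals[key], totals[key] / counts[key]) for key in totals}


# Made-up rows with hypothetical column names, not real Land Registry data.
sample = io.StringIO(
    "County,District,Price\n"
    "ESSEX,CHELMSFORD,250000\n"
    "ESSEX,COLCHESTER,150000\n"
    "KENT,DOVER,200000\n"
)
rows = list(csv.DictReader(sample))

# Grouping: total and average price per county.
by_county = summarise(rows, "County", "Price")

# Filter to one county, then regroup by district (the "drill in" step).
essex_rows = [row for row in rows if row["County"] == "ESSEX"]
by_district = summarise(essex_rows, "District", "Price")

print(by_county)
print(by_district)
```

Changing the question means changing the arguments (`group_by`, `measure`, or the filter) rather than writing a new report, which is roughly what returning to the base dataset view and picking different measures and groupings does.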

What the screenshots above don’t do justice to is the speed of Wave, so here’s a real-time video showing the steps I’ve described above - it’s pretty impressive, I’m sure you’ll agree, especially as this is taking place over an internet connection of 4.7 Mbps:

Note that all of the querying takes place on the server, so this involves genuine round trips - a testament to the power of the Wave query engine and the data compression that is achieved.