Image by DALL-E 3 based on a prompt from Bob Buzzard

Introduction

Thus far in my journey with Generative AI in Salesforce I've been focused on using prompt templates out of the box - in sales emails, field generation, or custom copilot actions. Salesforce isn't the boss of me though, so I'm also keen to figure out how to include prompts in my own applications.

The Use Case

I want a way for sales reps to get advice on what they should do with an opportunity - is it worth proceeding with, what does it make sense to do next, that kind of thing. I don't want this happening every time I load the page, as this will consume tokens and cost money, so I'm going to make it available as an LWC inside a tab that isn't the default on the opportunity page:

The Coach tab contains my LWC, but the user has to select the tab to receive the coaching. I could make this a button, but pressing buttons fulfils an innate desire for control and exploration in humans, so it could still end up consuming a lot of tokens. Opening tabs, for some reason, doesn't trigger the same urges.

The Implementation

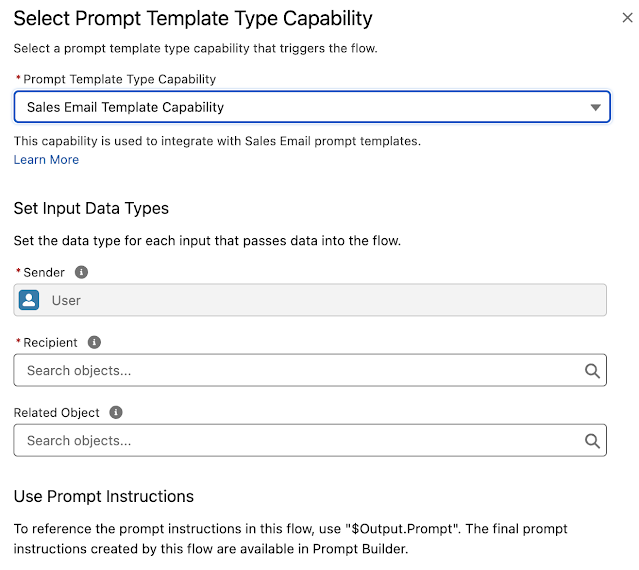

The first thing to figure out was how to "execute" a prompt from Apex - how to hydrate the prompt with data about an opportunity, send it to a Large Language Model, and receive a response. This is achieved via the Apex ConnectApi namespace. It's a multi-step process, so be prepared to write a little more code than you might expect.

Prepare the Input

The prompt template for coaching takes a single parameter - the id of the Opportunity the user is accessing. Prompt templates receive their inputs via a ConnectApi.EinsteinPromptTemplateGenerationsInput instance, which contains a map of ConnectApi.WrappedValue elements keyed by the API name of the parameter from the prompt template. Each ConnectApi.WrappedValue in turn wraps a map, in this case with a single entry holding the opportunity id under the key 'id'. As mentioned, a little more code than you might have expected to get a single id parameter passed to a prompt, but nothing too horrific:

    ConnectApi.EinsteinPromptTemplateGenerationsInput promptGenerationsInput =
        new ConnectApi.EinsteinPromptTemplateGenerationsInput();
    Map<String,ConnectApi.WrappedValue> valueMap = new Map<String,ConnectApi.WrappedValue>();
    Map<String, String> opportunityRecordIdMap = new Map<String, String>();
    opportunityRecordIdMap.put('id', oppId);
    ConnectApi.WrappedValue opportunityWrappedValue = new ConnectApi.WrappedValue();
    opportunityWrappedValue.value = opportunityRecordIdMap;
    valueMap.put('Input:Candidate_Opportunity', opportunityWrappedValue);
    promptGenerationsInput.inputParams = valueMap;
    promptGenerationsInput.isPreview = false;
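The nesting can be easier to see written out as a literal. The sketch below is JavaScript rather than Apex and is purely illustrative: it mirrors the shape of the Apex objects built above (an input holding a map of wrapped values, each wrapping a map with an 'id' entry). `buildPromptInput` is a hypothetical helper, not part of any Salesforce API, the record id is made up, and this isn't claimed to be the format of any request on the wire.

```javascript
// Hypothetical helper: mirrors the shape of the Apex input objects as plain data.
// Not a Salesforce API and not a wire format; for illustration only.
function buildPromptInput(paramApiName, recordId) {
    return {
        isPreview: false,
        inputParams: {
            // Outer map: keyed by the prompt template parameter's API name
            [paramApiName]: {
                // WrappedValue.value: an inner map holding the record id under 'id'
                value: { id: recordId }
            }
        }
    };
}

// The record id here is invented for the example
const input = buildPromptInput('Input:Candidate_Opportunity', '006000000000001AAA');
console.log(JSON.stringify(input, null, 2));
```

Seen this way, the single id sits three levels down: input, parameter name, then 'id'.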

Make the Request

    ConnectApi.EinsteinPromptTemplateGenerationsRepresentation generationsOutput =
        ConnectApi.EinsteinLLM.generateMessagesForPromptTemplate('0hfao000000hh9lAAA',
                                                                 promptGenerationsInput);

Extracting the Response

Putting it in front of the User

    @api get recordId() {
        return this._recordId;
    }

    set recordId(value) {
        this._recordId = value;
        // Ask the Apex controller for advice as soon as the record id arrives
        GetAdvice({oppId : this._recordId})
        .then(data => {
            this.thinking = false;
            this.advice = data;
        });
    }

Note that I have to do this via an imperative Apex call rather than using the wire service, as calling an Apex ConnectApi method consumes a DML call, which means my controller method can't be cacheable, which means it can't be wired.
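Because the call is imperative, the component has to manage its own loading and error states. Below is a framework-free sketch of that pattern, written so it can run outside Salesforce: `CoachSketch` stands in for the LWC class, and the `getAdvice` function passed in is a hypothetical stub for the imported Apex method. The `error` and `pending` properties and the catch handler are assumptions added for illustration, not part of the component shown above.

```javascript
// Framework-free stand-in for the LWC; getAdvice is a hypothetical stub
// for the imported Apex method, injected so the sketch runs anywhere.
class CoachSketch {
    constructor(getAdvice) {
        this.getAdvice = getAdvice;
        this.thinking = true;     // show the holding message until a reply arrives
        this.advice = undefined;
        this.error = undefined;   // assumption: not in the original component
        this.pending = undefined; // assumption: exposed so callers can await the call
    }

    get recordId() {
        return this._recordId;
    }

    set recordId(value) {
        this._recordId = value;
        this.thinking = true;
        // Imperative call: fired each time the id is set, never cached
        this.pending = this.getAdvice({ oppId: this._recordId })
            .then(data => {
                this.advice = data;
            })
            .catch(err => {
                // Record the failure so the UI can stop showing the holding message
                this.error = err.message;
            })
            .finally(() => {
                this.thinking = false;
            });
    }
}

// Stubbed Apex call that resolves with canned advice
const fakeGetAdvice = ({ oppId }) => Promise.resolve(`Advice for ${oppId}`);

const coach = new CoachSketch(fakeGetAdvice);
coach.recordId = '006000000000001AAA'; // made-up id
coach.pending.then(() => console.log(coach.thinking, coach.advice));
```

Without the catch handler, a failed request would leave the holding message on screen indefinitely, which is the main reason to add one.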

The user receives a holding message that the coach is thinking.

Then after a few seconds, the Coach is ready to point them in the right direction.

Related Posts

- Einstein Prompt Grounding with Apex

- Hands on with Salesforce Copilot

- Hands On with Prompt Builder

- Salesforce Help for Prompt Builder

- Salesforce Admins Ultimate Guide to Prompt Builder